Configuring Amazon Security Lake Connectors

This connector lets Stellar Cyber ingest logs from Amazon Security Lake and add the records to the Stellar Cyber data lake.

Integration with Amazon Security Lake enables organizations to securely store and manage their security data, providing real-time threat detection and response capabilities through Stellar Cyber's advanced security analytics and machine learning algorithms.

This connector uses the Open Cybersecurity Schema Framework (OCSF), the standardized format in which Amazon Security Lake stores data. Third-party vendors both send OCSF-formatted data to Amazon Security Lake and pull it from the lake. This connector is a subscriber integration: it pulls data from the lake.

Connector Overview: Amazon Security Lake

Capabilities

- Collect: Yes

- Respond: No

- Native Alerts Mapped: No

- Runs on: DP (Data Processor)

- Interval: Configurable

Collected Data

| Content Type | Index | Locating Records |
|---|---|---|
| OCSF-formatted data from Amazon Security Lake | Syslog | |

Domain

N/A

Response Actions

N/A

Third Party Native Alert Integration Details

N/A

Required Credentials

- AWS Role ID, External ID, and SQS Queue URL


Adding an Amazon Security Lake Connector

To add an Amazon Security Lake connector:

- Obtain Amazon Security Lake credentials

- Add the connector in Stellar Cyber

- Test the connector

- Verify ingestion

Obtaining Amazon Security Lake Credentials

This connector uses Amazon Simple Queue Service (SQS) queues and Amazon S3 buckets to pull data from Amazon Security Lake.

Follow the guidance in the Amazon Security Lake documentation.
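The subscriber pattern behind this can be sketched briefly. Security Lake posts a message to the SQS queue when new data is written to its S3 bucket, and the subscriber then reads the objects that message announces. The Python below shows only the message-parsing step. It assumes the messages follow the standard Amazon S3 event notification JSON format. It is not Stellar Cyber's implementation, and `s3_objects_from_message` and the sample bucket name are hypothetical.

```python
import json
from urllib.parse import unquote_plus


def s3_objects_from_message(body: str) -> list[tuple[str, str]]:
    """Return the (bucket, key) pairs announced by one SQS message body.

    Assumes the standard S3 event notification shape:
    {"Records": [{"s3": {"bucket": {"name": ...}, "object": {"key": ...}}}]}
    """
    message = json.loads(body)
    objects = []
    # S3 sends an "s3:TestEvent" message without "Records" when a
    # notification is first configured; it announces no objects.
    for record in message.get("Records", []):
        s3_info = record.get("s3")
        if not s3_info:
            continue
        bucket = s3_info["bucket"]["name"]
        # Keys in S3 event notifications are URL-encoded (spaces become "+"),
        # so decode them before passing them to S3 GetObject.
        key = unquote_plus(s3_info["object"]["key"])
        objects.append((bucket, key))
    return objects


if __name__ == "__main__":
    sample = json.dumps({
        "Records": [{
            "eventSource": "aws:s3",
            "s3": {
                "bucket": {"name": "example-security-lake-bucket"},  # hypothetical
                "object": {"key": "ext/example%3Dsource/part+1.parquet"},
            },
        }]
    })
    print(s3_objects_from_message(sample))
```

A real subscriber would then fetch each object and delete the SQS message only after the object has been processed, so that a failure leaves the message available for a retry.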

Permissions

You need the following permissions to create the SQS queue:

- s3:GetBucketNotification

- s3:PutBucketNotification

- sqs:CreateQueue

- sqs:DeleteQueue

- sqs:GetQueueAttributes

- sqs:GetQueueUrl

- sqs:SetQueueAttributes

When you set up data access for the Amazon Security Lake subscriber in the Enabling Subscriber Data Access procedure, the SQS queue is automatically created.

You also need permissions for the following actions to create the subscriber with data access:

- iam:CreateRole

- iam:DeleteRolePolicy

- iam:GetRole

- iam:PutRolePolicy

- lakeformation:GrantPermissions

- lakeformation:ListPermissions

- lakeformation:RegisterResource

- lakeformation:RevokePermissions

- ram:GetResourceShareAssociations

- ram:GetResourceShares

- ram:UpdateResourceShare

Use IAM to verify your permissions. Review the IAM policies attached to your IAM identity, and compare the actions they allow against the lists above, which cover creating the subscriber and notifying it when new data is written to the data lake.
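One way to make that comparison offline is a short Python sketch like the following. It encodes the two permission lists above and reports which required actions are not matched by the Allow action patterns you copy out of your policies. It is deliberately simplified: it ignores Deny statements, conditions, resource scoping, permission boundaries, and service control policies. The helper names are hypothetical.

```python
import json
from fnmatch import fnmatchcase

# Permissions for creating the SQS queue (notification setup).
NOTIFICATION_ACTIONS = [
    "s3:GetBucketNotification", "s3:PutBucketNotification",
    "sqs:CreateQueue", "sqs:DeleteQueue", "sqs:GetQueueAttributes",
    "sqs:GetQueueUrl", "sqs:SetQueueAttributes",
]
# Permissions for creating the subscriber with data access.
SUBSCRIBER_ACTIONS = [
    "iam:CreateRole", "iam:DeleteRolePolicy", "iam:GetRole", "iam:PutRolePolicy",
    "lakeformation:GrantPermissions", "lakeformation:ListPermissions",
    "lakeformation:RegisterResource", "lakeformation:RevokePermissions",
    "ram:GetResourceShareAssociations", "ram:GetResourceShares",
    "ram:UpdateResourceShare",
]
REQUIRED_ACTIONS = NOTIFICATION_ACTIONS + SUBSCRIBER_ACTIONS


def missing_actions(granted: list[str]) -> list[str]:
    """Return required actions that no granted Allow pattern matches.

    IAM action names are case-insensitive and may contain * and ?
    wildcards, so both sides are lowercased before glob matching.
    """
    patterns = [g.lower() for g in granted]
    return [
        action for action in REQUIRED_ACTIONS
        if not any(fnmatchcase(action.lower(), p) for p in patterns)
    ]


def allow_statement(actions: list[str]) -> dict:
    """Build a minimal identity-policy document allowing the given actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": actions,
            # "*" is the broadest possible scope; narrow it for production use.
            "Resource": "*",
        }],
    }


if __name__ == "__main__":
    granted = ["sqs:*", "s3:GetBucketNotification", "iam:Get*"]
    gaps = missing_actions(granted)
    print(gaps)
    print(json.dumps(allow_statement(gaps), indent=2))
```

For an authoritative answer that accounts for all of the factors this sketch ignores, the IAM policy simulator (the `SimulatePrincipalPolicy` API) is the better tool.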

Enabling Subscriber Data Access

To enable subscriber data access:

-

Log in as an administrative user to the Security Lake console at https://console.aws.amazon.com/securitylake.

-

Use the AWS Region drop-down to choose a Region in which to create the subscriber.

-

Choose Subscribers.

- Click Create subscriber.

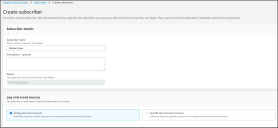

- For Subscriber details, enter a Subscriber name. The suggested Subscriber name is StellarCyber. You can also enter an optional Description.

The Region is automatically populated as your currently selected AWS Region. It cannot be changed.

- For Log and event sources, select All log and event sources.

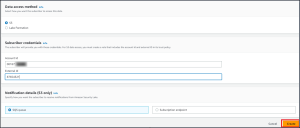

- For Data access method, choose S3.

- For Subscriber credentials, enter Account Id and External Id.

- The Account Id is the ID of the AWS account on which Stellar Cyber is running.

-

The External Id is a unique identifier. The suggested External Id is the Stellar Cyber tenant ID of the tenant running the connector. To find your tenant ID, navigate to System | Administration | Tenants and locate it in the table.

- For Notification details, choose SQS queue.

-

Click Create.

-

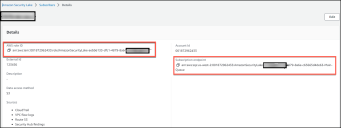

Navigate to Subscribers | Details. Copy the AWS role ID and the Subscription endpoint.

-

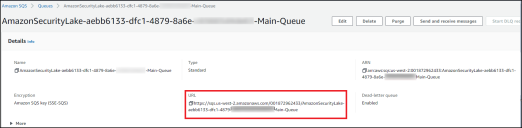

Navigate to Amazon SQS | Queues.

-

For the specific Subscription endpoint noted above, copy the SQS queue URL.
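If you prefer to script the subscriber instead of using the console, the steps above map roughly onto two Security Lake API calls, `CreateSubscriber` and `CreateSubscriberNotification`. The Python sketch below only builds the request arguments, using the parameter names of boto3's `securitylake` client. The helper names, the placeholder IDs, and the two example source names are assumptions. Check them against your account and the AWS API reference before use.

```python
import re

# Hypothetical helpers; the dictionary keys follow the request shapes of
# boto3's securitylake create_subscriber / create_subscriber_notification.
ACCOUNT_ID = re.compile(r"[0-9]{12}")


def create_subscriber_request(account_id, external_id, source_names,
                              name="StellarCyber", description=None,
                              source_version=None):
    """Build keyword arguments for create_subscriber with S3 data access."""
    if not ACCOUNT_ID.fullmatch(account_id):
        raise ValueError("account_id must be a 12-digit AWS account ID")
    if not external_id:
        raise ValueError("external_id must not be empty")
    sources = []
    for source_name in source_names:
        aws_source = {"sourceName": source_name}
        if source_version:  # omitted unless you pin a specific version
            aws_source["sourceVersion"] = source_version
        sources.append({"awsLogSource": aws_source})
    request = {
        "subscriberName": name,
        # "S3" corresponds to choosing S3 as the Data access method.
        "accessTypes": ["S3"],
        # principal = the AWS account on which Stellar Cyber runs.
        "subscriberIdentity": {"principal": account_id, "externalId": external_id},
        "sources": sources,
    }
    if description:
        request["subscriberDescription"] = description
    return request


def sqs_notification_request(subscriber_id):
    """Build keyword arguments for create_subscriber_notification with SQS."""
    return {
        "subscriberId": subscriber_id,
        "configuration": {"sqsNotificationConfiguration": {}},
    }


if __name__ == "__main__":
    request = create_subscriber_request(
        account_id="123456789012",        # placeholder account ID
        external_id="example-tenant-id",  # placeholder tenant ID
        source_names=["CLOUD_TRAIL_MGMT", "VPC_FLOW"],
    )
    print(request)
```

To send the requests, you would pass them to `boto3.client("securitylake", region_name=...)` as `create_subscriber(**request)` and then, using the `subscriberId` from that response, `create_subscriber_notification(**sqs_notification_request(subscriber_id))`. The sketch takes an explicit list of sources, so list every source you want Stellar Cyber to receive.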

Adding the Connector in Stellar Cyber

With the access information handy, you can add an Amazon Security Lake connector in Stellar Cyber:

-

Log in to Stellar Cyber.

-

Click System | Integration | Connectors. The Connector Overview appears.

-

Click Create. The General tab of the Add Connector screen appears. The information on this tab cannot be changed after you add the connector.

The asterisk (*) indicates a required field.

-

Choose Web Security from the Category drop-down.

-

Choose Amazon Security Lake from the Type drop-down.

-

For this connector, the supported Function is Collect, which is enabled already.

-

Enter a Name.

This field does not accept multibyte characters.

-

Choose a Tenant Name. This identifies which tenant is allowed to use the connector.

-

Choose the device on which to run the connector.

-

(Optional) When the Function is Collect, you can create Log Filters. For information, see Managing Log Filters.

-

Click Next. The Configuration tab appears.

The asterisk (*) indicates a required field.

-

Enter the AWS Role ID you noted above in Obtain your Amazon Security Lake credentials.

-

Enter the External ID you used above.

-

Choose the Region from the available AWS regions in the drop-down.

-

Enter the SQS Queue URL you noted above.

-

Choose the Interval (min). This is how often the logs are collected.

-

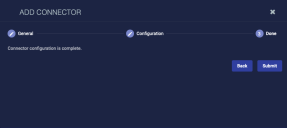

Click Next. The final confirmation tab appears.

-

Click Submit.

If the connector runs on the Data Processor, you must add it to a Data Analyzer profile before it can pull data.

The new connector is immediately active.
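Before entering values on the Configuration tab, a quick local sanity check can catch copy-and-paste mistakes. The following Python sketch is a hypothetical helper, not part of Stellar Cyber. It assumes the AWS Role ID is an IAM role ARN in the standard `aws` partition and that the SQS Queue URL uses the `https://sqs.<region>.amazonaws.com/<account-id>/<queue-name>` form, and it flags a queue URL whose Region differs from the Region you chose.

```python
import re

# Assumed formats: commercial "aws" partition only, current SQS URL style.
ROLE_ARN = re.compile(r"arn:aws:iam::[0-9]{12}:role/[A-Za-z0-9+=,.@_/-]+")
QUEUE_URL = re.compile(
    r"https://sqs\.([a-z0-9-]+)\.amazonaws\.com/([0-9]{12})/([A-Za-z0-9_-]+(?:\.fifo)?)"
)


def check_connector_config(role_id, external_id, region, queue_url, interval_min):
    """Return human-readable problems; an empty list means none were found."""
    problems = []
    if not ROLE_ARN.fullmatch(role_id):
        problems.append("AWS Role ID does not look like an IAM role ARN")
    if not external_id.strip():
        problems.append("External ID is empty")
    match = QUEUE_URL.fullmatch(queue_url)
    if not match:
        problems.append("SQS Queue URL is not in the expected format")
    elif match.group(1) != region:
        # The Region chosen in the connector should match the queue's Region.
        problems.append(
            f"SQS queue is in {match.group(1)}, but Region is set to {region}"
        )
    if interval_min < 1:
        problems.append("Interval (min) must be at least 1")
    return problems


if __name__ == "__main__":
    print(check_connector_config(
        "arn:aws:iam::123456789012:role/ExampleSubscriberRole",  # placeholder
        "example-tenant-id",
        "us-east-1",
        "https://sqs.us-east-1.amazonaws.com/123456789012/ExampleQueue",
        5,
    ))  # → []
```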

Testing the Connector

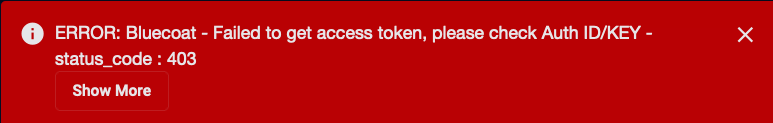

When you add (or edit) a connector, we recommend that you run a test to validate the connectivity parameters you entered. (The test validates only authentication and connectivity; it does not validate data flow.)

For connectors running on a sensor, Stellar Cyber recommends that you allow 30-60 seconds for new or modified configuration details to be propagated to the sensor before performing a test.

- Click System | Integrations | Connectors. The Connector Overview appears.

- Locate the connector that you added or modified, or that you want to test.

- Click Test at the right side of that row. The test runs immediately.

Note that you may run only one test at a time.

Stellar Cyber conducts a basic connectivity test for the connector and reports a success or failure result. A successful test indicates that you entered all of the connector information correctly.

To help you troubleshoot the connector, the dialog remains open until you explicitly close it by using the X button. If the test fails, you can select the button from the same row to review and correct issues.

The connector status is updated every five (5) minutes. A successful test clears the connector status, but if issues persist, the status reverts to failed after a minute.

Repeat the test as needed.
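For intuition about what an authentication and connectivity check involves for this connector, the Python sketch below assumes the subscriber role with the External ID (on the assumption that the role's trust policy requires it), then reads one attribute of the SQS queue. It is an illustration, not what the Test button actually runs. `check_connectivity` is hypothetical, and the AWS clients are passed in so it can be wired to boto3 or to test doubles.

```python
def check_connectivity(sts_client, make_sqs_client, role_arn, external_id,
                       queue_url, session_name="connector-test"):
    """Assume the subscriber role, then read one attribute of the SQS queue.

    Returns (ok, detail). Like the connector test, this checks only
    authentication and connectivity, not data flow.
    """
    try:
        creds = sts_client.assume_role(
            RoleArn=role_arn,
            RoleSessionName=session_name,
            ExternalId=external_id,
        )["Credentials"]
        sqs = make_sqs_client(
            aws_access_key_id=creds["AccessKeyId"],
            aws_secret_access_key=creds["SecretAccessKey"],
            aws_session_token=creds["SessionToken"],
        )
        attrs = sqs.get_queue_attributes(
            QueueUrl=queue_url,
            AttributeNames=["ApproximateNumberOfMessages"],
        )["Attributes"]
    except Exception as exc:  # botocore raises ClientError, among others
        return False, f"{type(exc).__name__}: {exc}"
    waiting = attrs.get("ApproximateNumberOfMessages", "?")
    return True, f"queue reachable, about {waiting} messages waiting"


# Wiring with boto3 (not executed here; requires AWS credentials):
#   import boto3
#   sts = boto3.client("sts")
#   make_sqs = lambda **creds: boto3.client("sqs", region_name="us-east-1", **creds)
#   print(check_connectivity(sts, make_sqs, role_arn, external_id, queue_url))
```

Passing the clients in, rather than creating them inside the function, keeps the check testable without AWS access and lets you pick the Region that matches the queue.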

Verifying Ingestion

To verify ingestion:

- Click Investigate | Threat Hunting. The Interflow Search tab appears.

- Change the Indices to Syslog. The table immediately updates to show ingested Interflow records.

A single connector can produce several different msg_origin_source and msg_class values, which are assigned dynamically based on the incoming third-party data:

- The default msg_origin_source is amazon_security_lake. A specific example is cloudtrail.

- The default msg_class is amazon_security_lake_log. A specific example is management_data_and_insights.
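To see which msg_origin_source and msg_class combinations the connector has produced, you can tally them over records taken from the Interflow search. The Python sketch assumes the records are available as a list of dictionaries containing those two fields. How you export them is an assumption, and `summarize_origins` and `uses_defaults` are hypothetical helpers.

```python
from collections import Counter

# Default values documented for this connector.
DEFAULT_ORIGIN_SOURCE = "amazon_security_lake"
DEFAULT_CLASS = "amazon_security_lake_log"


def summarize_origins(records):
    """Count (msg_origin_source, msg_class) pairs across Interflow records."""
    return Counter(
        (r.get("msg_origin_source", "<missing>"), r.get("msg_class", "<missing>"))
        for r in records
    )


def uses_defaults(pair):
    """True if either field of the pair has the connector's default value."""
    source, msg_class = pair
    return source == DEFAULT_ORIGIN_SOURCE or msg_class == DEFAULT_CLASS


if __name__ == "__main__":
    sample = [  # illustrative records, not real output
        {"msg_origin_source": "cloudtrail", "msg_class": "management_data_and_insights"},
        {"msg_origin_source": "amazon_security_lake", "msg_class": "amazon_security_lake_log"},
        {"msg_origin_source": "cloudtrail", "msg_class": "management_data_and_insights"},
    ]
    for pair, count in summarize_origins(sample).most_common():
        marker = " (default)" if uses_defaults(pair) else ""
        print(f"{pair[0]} / {pair[1]}: {count}{marker}")
```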