Adding an Azure Data Sink

You must have Root scope to use this feature.

You can add an Azure data sink from the System | Data Processor | Data Sinks page using the instructions in this topic. Adding an Azure data sink consists of the following major steps:

- Create a container in Azure to use as the destination for the data sink.

- Create a SAS token for the container in Azure.

- Add the Azure data sink in Stellar Cyber.

Use our example as a guideline, as you might be using a different software version.

Azure Blob or Azure Data Lake Storage Supported

You can point your Azure data sink at a container in either Azure Blob storage or Azure Data Lake storage. Both are supported, and the procedures for creating the container in Azure, generating the SAS token, and adding the data sink in Stellar Cyber are the same.

Refer to Upgrade Azure Blob Storage with Azure Data Lake Storage Gen2 capabilities for information on moving an existing blob-based data sink to Azure Data Lake storage.

Creating a New Container in Azure

To create a new container in Azure you must use an administrator account with full permissions:

- Log in to Azure.

- Navigate to Storage accounts | [YourStorageAccount] | Blob service | Containers.

- Click + Container.

- Create the new container.

  Leave NFS v3 disabled as Azure NFS v3 does not support blob storage APIs.

- Copy the name of the container you created. You will need this when adding the Data Sink in Stellar Cyber.

-

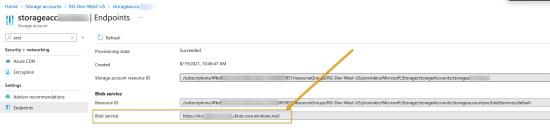

Navigate to Storage accounts | [YourStorageAccount] and select the Settings | Endpoints option in the left navigation panel.

-

Copy the value shown for Blob service, as in the figure below. You will need this when adding the Data Sink in Stellar Cyber.
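Before moving on, it can help to sanity-check the two values you copied. The following is a minimal sketch, not part of Stellar Cyber or Azure tooling: the function names, the account name `examplestorageacct`, and the container name `stellar-sink` are hypothetical. It checks a container name against Azure's container naming rules (3 to 63 characters; lowercase letters, digits, and hyphens; every hyphen surrounded by a letter or digit) and shows how the Blob service endpoint and the container name combine into a container URL.

```python
import re

# Azure container naming rules: 3-63 characters, lowercase letters, digits,
# and hyphens; every hyphen must sit between two letters or digits.
_CONTAINER_NAME = re.compile(r"^[a-z0-9]+(-[a-z0-9]+)*$")


def is_valid_container_name(name: str) -> bool:
    """Return True if the name satisfies the rules described above."""
    return 3 <= len(name) <= 63 and _CONTAINER_NAME.fullmatch(name) is not None


def container_url(blob_endpoint: str, container: str) -> str:
    """Join the Blob service endpoint and the container name into one URL."""
    if not is_valid_container_name(container):
        raise ValueError(f"invalid container name: {container!r}")
    return blob_endpoint.rstrip("/") + "/" + container


# Hypothetical values for illustration only.
endpoint = "https://examplestorageacct.blob.core.windows.net/"
print(container_url(endpoint, "stellar-sink"))
```

In Stellar Cyber you enter the endpoint and the container name in separate fields, so the combined URL is useful only for your own verification.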

Generating the SAS Token in Azure

Stellar Cyber's access to the Azure data sink is authenticated using a SAS token created in Azure. You can create tokens at the container level or at the account level in Azure. In this example, we create a SAS token at the account level.

- Log in to Azure.

- Navigate to Storage accounts | [YourStorageAccount].

- Select the Security + networking | Shared access signature option in the left navigation panel.

- Make sure that all Allowed services are checked.

- Make sure that at least the Container and Object boxes are checked under Allowed resource types.

- Under Allowed permissions, check every option except Immutable storage.

- Check the Enables deletion of versions option.

- Make sure that both Read/Write and Filter are enabled for Allowed blob index permissions.

- Set the Start and Expiry date/time so that the token stays valid for at least as long as you expect to use this data sink. The SAS token must remain valid for the entire time you want to write data to this Data Sink.

- Set Allowed protocols to HTTPS only.

The figure below illustrates these options:

- When you have finished making these settings, click the Generate SAS and connection string option.

- Copy the value returned by Azure in the SAS Token field. You will need this value when you add the Data Sink in Stellar Cyber.
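An account-level SAS token is a URL query string, so you can inspect it before pasting it into Stellar Cyber. The sketch below is illustrative only: `check_account_sas` is a hypothetical helper, the sample token is made up (its `sig` value is a placeholder), and the code assumes the expiry is written in the `YYYY-MM-DDTHH:MM:SSZ` form. It checks three of the settings described above: the Container and Object resource types (`srt`), HTTPS-only protocol (`spr`), and the expiry time (`se`).

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs


def check_account_sas(token: str, now: datetime) -> list:
    """Return a list of problems found in an account-level SAS token."""
    fields = {k: v[0] for k, v in parse_qs(token.lstrip("?")).items()}
    problems = []
    # srt lists the allowed resource types: s=service, c=container, o=object.
    if not {"c", "o"} <= set(fields.get("srt", "")):
        problems.append("Container and Object resource types are required")
    # spr lists the allowed protocols; "https" alone means HTTPS only.
    if fields.get("spr") != "https":
        problems.append("Allowed protocols should be HTTPS only")
    # se is the expiry time in UTC. Assumption: full date-time form with "Z";
    # other accepted forms would raise ValueError here.
    expiry = fields.get("se")
    if expiry is None:
        problems.append("Expiry (se) is missing")
    else:
        expires_at = datetime.strptime(expiry, "%Y-%m-%dT%H:%M:%SZ")
        if expires_at.replace(tzinfo=timezone.utc) <= now:
            problems.append("Token has expired")
    return problems


# Made-up token for illustration; a real token carries a real signature.
sample = ("?sv=2022-11-02&ss=bfqt&srt=sco&sp=rwdlacupiytfx"
          "&se=2030-01-01T00:00:00Z&st=2024-01-01T00:00:00Z"
          "&spr=https&sig=PLACEHOLDER")
print(check_account_sas(sample, datetime.now(timezone.utc)))
```

An empty list means none of the three checks failed. The sketch does not verify the signature or the individual permissions in `sp`.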

Adding the Azure Data Sink in Stellar Cyber

To add an Azure Data Sink:

- Click System | Data Processor | Data Sinks. The Data Sink list appears.

- Click Create. The Setup Data Sink screen appears.

- Enter the Name of your new Data Sink. This field does not support multibyte characters.

- Choose AzureBlob for the Type.

Additional fields appear in the Setup Data Sink screen:

- Use the Endpoint option to specify the endpoint where the destination container is located. You identified the endpoint for your Azure storage account in Creating a New Container in Azure.

- Supply the name of the Azure container to be used as the destination for this Data Sink in the Container field. You created the container in Creating a New Container in Azure.

- Select the types of data to send to the Data Sink by toggling the following checkboxes:

  - Raw Data – Raw data received from sensors, log analysis, and connectors after normalization and enrichment have occurred and before the data is stored in the Data Lake.

  - Alerts – Security anomalies identified by Stellar Cyber using machine learning and third-party threat-intelligence feeds, reported in the Alerts interface, and stored in the aella-ser-* index.

  - Assets – MAC addresses, IP addresses, and routers identified by Stellar Cyber based on network traffic, log analysis, and imported asset feeds and stored in the aella-assets-* index.

  - Users – Users identified by Stellar Cyber based on network traffic and log analysis and stored in the aella-users-* index.

  Alerts, assets, and users are also known as derived data because Stellar Cyber extrapolates them from raw data.
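If you later process index names in your own tooling, the three derived data types can be told apart by the index patterns listed above. This is a minimal sketch under stated assumptions: the helper name and the sample index names are hypothetical, and the function returns `None` for any index that does not match one of the three documented patterns rather than guessing what it contains.

```python
from fnmatch import fnmatchcase
from typing import Optional

# Index patterns taken from the data type descriptions above.
DERIVED_INDEX_PATTERNS = {
    "Alerts": "aella-ser-*",
    "Assets": "aella-assets-*",
    "Users": "aella-users-*",
}


def derived_type_for_index(index_name: str) -> Optional[str]:
    """Return the derived data type for an index name, or None if no match."""
    for data_type, pattern in DERIVED_INDEX_PATTERNS.items():
        if fnmatchcase(index_name, pattern):
            return data_type
    return None


# Hypothetical index names for illustration only.
for name in ("aella-ser-example", "aella-assets-example", "some-other-index"):
    print(name, "->", derived_type_for_index(name))
```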

- Locate the SAS token you created in Generating the SAS Token in Azure and paste it into the SAS Token field.

- Click Next.

  The Advanced (Optional) page appears.

- Specify whether to partition records into files based on their write_time (the default) or timestamp.

  Every Interflow record includes both of these fields:

  - write_time indicates the time at which the Interflow record was actually created.

  - timestamp indicates the time at which the action documented by the Interflow record took place (for example, the start of a session, the time of an update, and so on).

  When files are written to the Data Sink they are stored at a path like the following, with separate files for each minute:

  In this example, we see the path for November 9, 2021 at 00:23. The records appearing in this file differ depending on the Partition time by setting, as follows:

  - If write_time is enabled, then all records stored under this path would have a write_time value falling into the minute of UTC 2021.11.09 - 00:23.

  - If timestamp is enabled, then all records stored under this path would have a timestamp value falling into the minute of UTC 2021.11.09 - 00:23.

  In most cases, you will want to use the default of write_time. It tends to result in a more cost-efficient use of resources and is also compatible with future use cases of data backups and cold storage using a data sink as a target.
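The difference between the two partition settings can be sketched as a grouping of records by UTC minute. This is an illustration, not Stellar Cyber's implementation: the function names are hypothetical, the sketch assumes both fields hold epoch milliseconds, and it does not reproduce the actual path layout used in the sink.

```python
from collections import defaultdict
from datetime import datetime, timezone


def minute_bucket(epoch_ms: int) -> str:
    """Format an epoch-millisecond value as its UTC minute."""
    moment = datetime.fromtimestamp(epoch_ms / 1000, tz=timezone.utc)
    return moment.strftime("%Y.%m.%d - %H:%M")


def partition(records, partition_by="write_time"):
    """Group records by the UTC minute of the chosen field."""
    buckets = defaultdict(list)
    for record in records:
        buckets[minute_bucket(record[partition_by])].append(record)
    return dict(buckets)


def to_ms(*parts) -> int:
    """Build an epoch-millisecond value from UTC date-time components."""
    return int(datetime(*parts, tzinfo=timezone.utc).timestamp() * 1000)


# Two made-up records created in the same minute but describing
# events that took place at different times.
records = [
    {"id": 1, "write_time": to_ms(2021, 11, 9, 0, 23, 10),
     "timestamp": to_ms(2021, 11, 9, 0, 21, 50)},
    {"id": 2, "write_time": to_ms(2021, 11, 9, 0, 23, 40),
     "timestamp": to_ms(2021, 11, 9, 0, 23, 5)},
]

print(sorted(partition(records, "write_time")))
print(sorted(partition(records, "timestamp")))
```

With write_time, both records land in the same minute. With timestamp, they are split across two minutes because the underlying events occurred at different times.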

- Enable the Compression option to specify that records be written to the Data Sink in compressed (gzip) format.

  For most use cases, Stellar Cyber recommends enabling the compression option to save on storage costs. Compression results in file sizes roughly 1/10th the size of uncompressed files.
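To get a feel for what gzip does to record data, you can compress a sample yourself. The sketch below is an illustration only: the records are made up, and writing them as one JSON object per line is an assumption rather than a description of the files Stellar Cyber produces. The ratio you see depends entirely on the data, so treat the printed sizes as an experiment, not a prediction.

```python
import gzip
import json

# Made-up records; real Interflow records have many more fields.
records = [
    {"msg_class": "example", "id": i, "write_time": 1636417380000 + i}
    for i in range(1000)
]

# Assumption: one JSON object per line.
raw = "\n".join(json.dumps(r) for r in records).encode("utf-8")
compressed = gzip.compress(raw)

print("uncompressed bytes:", len(raw))
print("compressed bytes:  ", len(compressed))

# gzip is lossless: decompressing returns the original bytes.
restored = gzip.decompress(compressed)
```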

- You can use the Retrieve starting from field to specify a date and time from which Stellar Cyber should attempt to write alert, asset, and user records to a newly created Data Sink. You can click in the field to use a calendar to set the time/date.

  Note the following:

  - If you do not set this option, Stellar Cyber simply writes data from the time at which the sink is created.

  - This option only affects alert, asset, and user records. Raw data is written from the time at which the sink is created regardless of the time/date specified here.

  - If you set a time/date earlier than available data, Stellar Cyber silently skips the period for which no records are available.

- Use the Batch Window (seconds) and Batch Size fields to specify how data is written to the sink.

  - The Batch Window specifies the maximum amount of time that can elapse before data is written to the Data Sink.

  - The Batch Size specifies the maximum number of records that can accumulate before they are sent to the Data Sink. You can specify either 0 (disabled) or a number of records between 100 and 10,000.

  Stellar Cyber sends a batch to the Data Sink as soon as either of these limits is reached, whichever comes first.

  For example, consider a Data Sink with a Batch Window of 30 seconds and a Batch Size of 300 records:

  - If at the end of the Batch Window of 30 seconds, Stellar Cyber has 125 records, it sends them to the Data Sink. The Batch Window was reached before the Batch Size.

  - If at the end of 10 seconds, Stellar Cyber has 300 records, it sends the 300 records to the Data Sink. The Batch Size was reached before the Batch Window.

  These options are primarily useful for data sink types that charge you by the API call (for example, AWS S3 and Azure). Instead of sending records as they are received, you can use these options to batch the records, minimizing both API calls and their associated costs for Data Sinks in the public cloud.

  By default, these options are set to 30 seconds and 1000 records for Azure Data Sinks.
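The "whichever is reached first" behavior can be sketched as a small batching class. This is not Stellar Cyber's implementation: the class and method names are hypothetical, and the sketch assumes the window starts when the first record of a batch arrives. The clock is injectable so the example can simulate the passage of time.

```python
import time


class Batcher:
    """Flush when either the batch size or the batch window is reached."""

    def __init__(self, send, window_seconds=30, batch_size=1000,
                 clock=time.monotonic):
        self.send = send
        self.window_seconds = window_seconds
        self.batch_size = batch_size  # 0 disables the size limit
        self.clock = clock
        self.pending = []
        self.window_start = clock()

    def add(self, record):
        # Assumption: the window starts with the first record of a batch.
        if not self.pending:
            self.window_start = self.clock()
        self.pending.append(record)
        if self.batch_size and len(self.pending) >= self.batch_size:
            self.flush()

    def tick(self):
        """Call periodically; flushes if the window has elapsed."""
        elapsed = self.clock() - self.window_start
        if self.pending and elapsed >= self.window_seconds:
            self.flush()

    def flush(self):
        if self.pending:
            self.send(self.pending)
            self.pending = []
        self.window_start = self.clock()


# Simulated clock so the example runs instantly.
now = [0.0]
sent = []
batcher = Batcher(sent.append, window_seconds=30, batch_size=300,
                  clock=lambda: now[0])

for i in range(125):          # 125 records arrive, then the window elapses
    batcher.add(i)
now[0] = 30.0
batcher.tick()

for i in range(300):          # 300 records arrive within 10 seconds
    batcher.add(i)
now[0] = 40.0

print([len(batch) for batch in sent])
```

The first batch is sent because the 30-second window elapsed with 125 records pending; the second is sent as soon as the 300th record arrives, matching the two cases in the example above.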

-

-

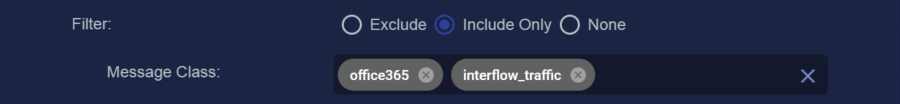

You can use the Filter options to Exclude or Include specific Message Classes for the Data Sink. By default, Filter is set to None. If you check either Exclude or Include, an additional Message Class field appears where you can specify the message classes to use as part of the filter. For example:

You can find the available message classes to use as filters by searching your Interflow in the Investigate | Threat Hunting | Interflow Search page. Search for the msg_class field in any index to see the prominent message classes in your data.
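The effect of the three Filter settings can be sketched as a predicate on the msg_class field. This is an illustration under stated assumptions: the function name and the message class values `class_a` and `class_b` are hypothetical, and the sketch assumes Include sends only the listed classes while Exclude sends everything except them.

```python
def passes_filter(record, mode="None", message_classes=()):
    """Return True if the record would be sent under the given filter."""
    msg_class = record.get("msg_class")
    if mode == "Include":
        return msg_class in message_classes
    if mode == "Exclude":
        return msg_class not in message_classes
    return True  # mode "None": no filtering


# Made-up records with hypothetical message classes.
records = [
    {"id": 1, "msg_class": "class_a"},
    {"id": 2, "msg_class": "class_b"},
]

included = [r["id"] for r in records if passes_filter(r, "Include", {"class_a"})]
excluded = [r["id"] for r in records if passes_filter(r, "Exclude", {"class_a"})]
unfiltered = [r["id"] for r in records if passes_filter(r)]
print(included, excluded, unfiltered)
```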

- Click Next to review the Data Sink configuration. Use the Back button to correct any errors you notice.

- When you are satisfied with the sink's configuration, click Submit to add it to the DP.

The new Data Sink is added to the list.